In this article we will cover:

- ETL pipeline explained

- Benefits of ETL pipelines

- How to implement an ETL pipeline in a business

- Automating some of the ETL pipeline steps

- ETL pipeline FAQs

ETL pipeline explained

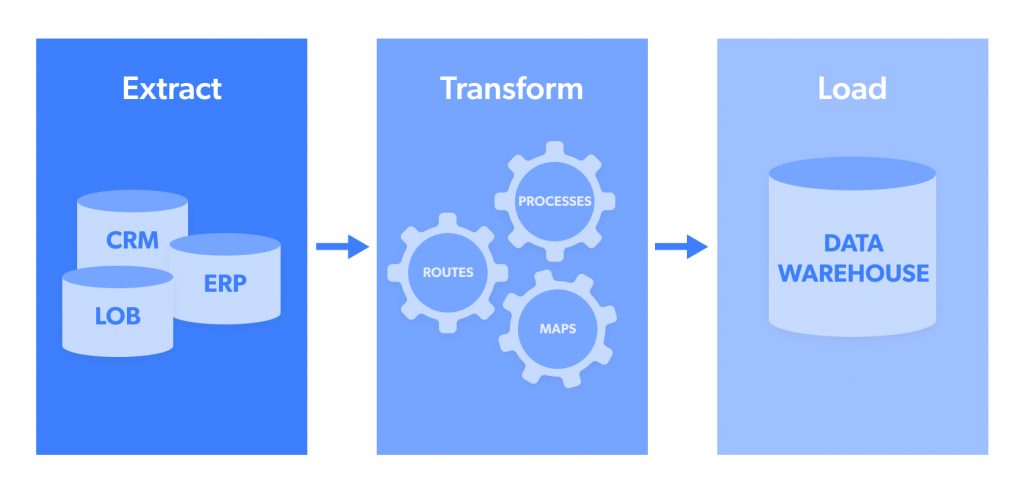

ETL stands for:

- Extract: This is the stage in which data is extracted from a source or data pool, such as a NoSQL database or a publicly accessible website (for example, trending posts on social media).

- Transform: Extracted data is typically collected in multiple formats. ‘Transformation’ refers to the process of restructuring this data into a uniform format that can then be sent to the target system, such as JSON, CSV, HTML, or Microsoft Excel.

- Load: This is the actual transfer or upload of data to a data pool/warehouse, CRM, or database, where it can be analyzed to generate actionable output. Some of the most widely used data destinations include webhooks, email, Amazon S3, Google Cloud, Microsoft Azure, SFTP, and APIs.
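To make the three stages concrete, here is a minimal sketch using only Python's standard library. It assumes two hypothetical sources (a CSV export and a JSON feed) that describe the same kind of record with different field names, and it uses an in-memory SQLite database as a stand-in for the target warehouse; all names and data are illustrative.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs: similar product records arriving in two formats.
CSV_SOURCE = "sku,name,price\nA1,Desk lamp,19.99\nB2,Office chair,89.50\n"
JSON_SOURCE = '[{"id": "C3", "title": "Monitor arm", "cost": "45.00"}]'


def extract() -> list[dict]:
    """Extract: read raw records from each source in its native format."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows += json.loads(JSON_SOURCE)
    return rows


def transform(rows: list[dict]) -> list[tuple[str, str, float]]:
    """Transform: map the differing field names onto one uniform schema."""
    uniform = []
    for row in rows:
        sku = row.get("sku") or row.get("id")
        name = row.get("name") or row.get("title")
        price = float(row.get("price") or row.get("cost"))
        uniform.append((sku, name.strip(), price))
    return uniform


def load(records: list[tuple[str, str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the uniform records into the target table."""
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS products (sku TEXT PRIMARY KEY, name TEXT, price REAL)"
        )
        conn.executemany("INSERT OR REPLACE INTO products VALUES (?, ?, ?)", records)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract()), conn)
    for row in conn.execute("SELECT * FROM products ORDER BY sku"):
        print(row)
```

Keeping each stage in its own function means a new source only touches `extract` and `transform`, while the loading logic stays the same.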

Things to keep in mind:

- ETL pipelines are especially suitable for smaller datasets with higher levels of complexity.

- ‘ETL pipelines’ are often confused with ‘data pipelines’. The latter is a broader term for full-cycle data collection architectures, whereas the former is a more targeted procedure.

Benefits of ETL pipelines

Some of the key benefits of ETL pipelines include:

One: Raw data from multiple sources

Companies that are looking to grow rapidly can benefit from strong ETL pipeline architectures because they broaden their field of view. A good ETL data ingestion flow enables companies to collect raw data in various formats from multiple sources and feed it into their systems efficiently for analysis. As a result, decision-making stays much more in touch with current consumer and competitor trends.

Two: Decreases ‘time to insight’

As with any operational flow, once the pipeline is up and running, the time from initial collection to actionable insight can be reduced considerably. Instead of data experts manually reviewing each dataset, converting it to the desired format, and then sending it to the target destination, the pipeline streamlines these steps, enabling quicker insights.

Three: Frees up company resources

Building on this last point, good ETL pipelines free up company resources on many levels, including personnel. Companies actually:

“Spend over 80% of their time on cleaning data in preparation for AI usage”.

Data cleaning in this instance, among other things, refers to ‘data formatting’, something that solid ETL pipelines will take care of.
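In practice, much of this formatting work comes down to small normalization rules applied to every record. The sketch below assumes dates and prices arrive as strings in a handful of known layouts; the format list and function names are hypothetical, and the order of `DATE_FORMATS` decides how ambiguous dates like `03/02/2024` are read (here, day first).

```python
from datetime import datetime

# Hypothetical layouts seen across sources; the first matching format wins.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")


def normalize_date(raw: str) -> str:
    """Return the date as ISO 8601 (YYYY-MM-DD), trying each known layout in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")


def normalize_price(raw: str) -> float:
    """Strip a dollar sign and thousands separators, then round to cents."""
    cleaned = raw.replace("$", "").replace(",", "").strip()
    return round(float(cleaned), 2)
```

For example, `normalize_date("Mar 5, 2024")` and `normalize_date("05/03/2024")` both yield `"2024-03-05"`, and `normalize_price("$1,299.00")` yields `1299.0`.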

How to implement an ETL pipeline in a business

Here is an eCommerce use case that can help illustrate how an ETL pipeline can be implemented in a business:

A digital retail business needs to aggregate many different data points from various sources in order to remain competitive and appealing to target customers. Some examples of data sources may include:

- Reviews left for competing vendors on marketplaces

- Google search trends for items/services

- Advertisements (copy + images) of competing businesses

All of these data points can be collected in different formats, such as .txt, .csv, .tab, SQL, .jpg, and others. Having target information in multiple formats works against the company's business goals (i.e., deriving competitor and consumer insights in real time and making changes to capture more sales).

It is for this reason that this eCom vendor may choose to set up an ETL pipeline that converts all of the above formats into one of the following (based on their algorithm/input system preferences):

- JSON

- CSV

- HTML

- Microsoft Excel
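The conversion step described above could be sketched as follows, using only Python's standard library. It assumes the text-based sources arrive as strings tagged with a filename, that `.csv` is comma-delimited, `.tab` is tab-delimited, and `.txt` holds one review per line; these conventions and all function names are hypothetical.

```python
import csv
import html
import io
import json
from pathlib import PurePath


def parse_source(filename: str, content: str) -> list[dict]:
    """Parse raw delimited or plain-text content into a list of dict records."""
    suffix = PurePath(filename).suffix.lower()
    if suffix in (".csv", ".tab"):
        delimiter = "," if suffix == ".csv" else "\t"
        return [dict(row) for row in csv.DictReader(io.StringIO(content), delimiter=delimiter)]
    if suffix == ".txt":
        # Assumption: plain-text exports hold one review per non-empty line.
        return [{"text": line.strip()} for line in content.splitlines() if line.strip()]
    raise ValueError(f"No parser for {suffix!r} files")


def to_json(records: list[dict]) -> str:
    return json.dumps(records, indent=2)


def to_csv(records: list[dict]) -> str:
    # Assumes every record shares the keys of the first one.
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]) if records else [])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_html(records: list[dict]) -> str:
    headers = list(records[0]) if records else []
    head = "".join(f"<th>{html.escape(h)}</th>" for h in headers)
    rows = "".join(
        "<tr>" + "".join(f"<td>{html.escape(str(r[h]))}</td>" for h in headers) + "</tr>"
        for r in records
    )
    return f"<table><tr>{head}</tr>{rows}</table>"


WRITERS = {"json": to_json, "csv": to_csv, "html": to_html}


def convert(filename: str, content: str, output_format: str) -> str:
    """Parse one source file and re-emit it in the chosen uniform format."""
    return WRITERS[output_format](parse_source(filename, content))
```

Excel output is left out because it needs a third-party library. With pandas and an engine such as openpyxl installed, `pandas.DataFrame(records).to_excel("catalog.xlsx", index=False)` is one option.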

Say they chose Microsoft Excel as their preferred output format to display competitor product catalogs. A sales cycle and production manager can then quickly review this and identify new products being sold by competitors that they may want to include in their own digital catalog.
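Part of that review could also be scripted once the catalogs share a uniform format. A minimal sketch, assuming competitor items are records with a `name` field and that products are matched on a lowercased, whitespace-collapsed name; this matching rule is a deliberately naive, hypothetical one, and real catalogs usually need fuzzier matching.

```python
def normalize_name(name: str) -> str:
    """Lowercase and collapse whitespace so trivial variations compare equal."""
    return " ".join(name.lower().split())


def find_new_products(competitor_items: list[dict], own_names: list[str]) -> list[dict]:
    """Return competitor items absent from our own catalog, keeping the first of any duplicates."""
    own = {normalize_name(n) for n in own_names}
    seen: set[str] = set()
    new_items = []
    for item in competitor_items:
        key = normalize_name(item["name"])
        if key not in own and key not in seen:
            seen.add(key)
            new_items.append(item)
    return new_items
```

For example, with competitor items named "Standing Desk", "desk  lamp", and "standing desk", and an own catalog containing "Desk Lamp", only the first "Standing Desk" record is returned.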

Automating some of the ETL pipeline steps

Many companies simply do not have the time, resources, and manpower to manually set up data collection operations as well as an ETL pipeline. In these scenarios, they opt for a fully automated web data extraction tool.

This type of technology enables companies to focus on their own business operations while leveraging autonomous ETL pipeline architectures developed and operated by a third party. The main benefits of this option include:

- Web data extraction with zero infrastructure/code

- No additional technical manpower needed

- Data is cleaned, parsed, and synthesized automatically and delivered in a uniform format of your choice (JSON, CSV, HTML, or Microsoft Excel). This step replaces the in-house ETL pipeline and is handled automatically.

- The data is then delivered to the company-side consumer (e.g., a team, algorithm, or system) via webhook, email, Amazon S3, Google Cloud, Microsoft Azure, SFTP, or API.
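As a generic illustration of the webhook option (not Bright Data's implementation), delivery can be as simple as POSTing the uniformly formatted records as JSON. This sketch uses Python's built-in `urllib.request`; the endpoint URL and the `{"records": [...]}` payload shape are hypothetical assumptions, and request construction is kept separate from sending so it can be inspected without network access.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with the consumer's real webhook URL.
WEBHOOK_URL = "https://example.com/hooks/etl-delivery"


def build_webhook_request(records: list[dict], url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Wrap the records in a JSON body and prepare a POST request."""
    payload = json.dumps({"records": records}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def deliver(records: list[dict], url: str = WEBHOOK_URL, timeout: float = 10.0) -> int:
    """Send the records and return the HTTP status code from the receiving endpoint."""
    with urllib.request.urlopen(build_webhook_request(records, url), timeout=timeout) as response:
        return response.status
```

A production version would typically add retries and authentication, which depend on what the receiving system expects.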

In addition to automated data extraction tools, there is also an efficient and useful shortcut that not many people know about. Many companies are speeding up “time to data insight” by eliminating the need for data collection and ETL pipelines entirely. They do this by leveraging ready-to-use datasets that are already uniformly formatted and delivered directly to in-house data consumers.

The bottom line

ETL pipelines are an effective way to streamline data collection from multiple sources, decrease the time it takes to derive actionable insights from data, and free up mission-critical manpower and resources. But despite these efficiencies, ETL pipelines still require considerable time and effort to develop and operate. For this reason, many businesses choose to outsource and automate their data collection and ETL pipeline flow using tools such as Bright Data’s web scraping tool. Contact us to find the ultimate solution for your data project.

ETL pipeline FAQs

What does ETL stand for?

ETL stands for Extract, Transform, and Load. It is the process of taking data from multiple sources and formatting it uniformly for ingestion by a target system or application.

What is loading in ETL?

Loading is the final step in the ETL process. It entails uploading the uniformly formatted data to a data pool or warehouse so that it can be processed and analyzed to derive insights. The three main types of loads are:

- Initial loads

- Incremental loads

- Full refreshes
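The three load types differ mainly in how much of the target they touch. A minimal sketch using Python's built-in `sqlite3` module, assuming a hypothetical `products` table and an ISO 8601 `updated_at` column that serves as the incremental watermark (ISO strings sort chronologically, so plain string comparison works):

```python
import sqlite3

# Hypothetical target table used for illustration.
SCHEMA = "CREATE TABLE IF NOT EXISTS products (sku TEXT PRIMARY KEY, price REAL, updated_at TEXT)"
UPSERT = "INSERT OR REPLACE INTO products VALUES (:sku, :price, :updated_at)"


def initial_load(conn: sqlite3.Connection, records: list[dict]) -> None:
    """Initial load: create the target and populate it for the first time."""
    with conn:
        conn.execute(SCHEMA)
        conn.executemany("INSERT INTO products VALUES (:sku, :price, :updated_at)", records)


def incremental_load(conn: sqlite3.Connection, records: list[dict], watermark: str) -> int:
    """Incremental load: upsert only records changed since the previous run's watermark."""
    changed = [r for r in records if r["updated_at"] > watermark]
    with conn:
        conn.executemany(UPSERT, changed)
    return len(changed)


def full_refresh(conn: sqlite3.Connection, records: list[dict]) -> None:
    """Full refresh: wipe the target and reload the complete dataset."""
    with conn:
        conn.execute("DELETE FROM products")
        conn.executemany(UPSERT, records)
```

Incremental loads move the least data but depend on a reliable change marker; full refreshes are simpler and self-correcting but reprocess everything on every run.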

Is it possible to build an ETL pipeline with Python?

Yes, building an ETL pipeline with Python is possible. Various tools are commonly used for this, including ‘Luigi’ for workflow management and ‘Pandas’ for data processing and movement.